Two thousand families and young people are suing social media companies such as Meta, Snap and ByteDance over the damage that apps like Instagram, Snapchat and TikTok have done to children. They are going up against some of the most powerful companies in the world. Here’s why they’re doing it:

What age were you when you first got access to social media? Were you thirteen, as the age restrictions suggest? Or did you lie, easily cheating an honesty-based system so that you could join in with your friends?

Either way, most of you probably encountered social media before the age of eighteen. That means you belong to the generation most affected, and most harmed, by it.

TikTok videos warning parents about the dangers social media poses to children have proliferated online.

Meanwhile, 2,000 families are suing social media companies over this harm, and 350 of those cases are scheduled for 2024. Below are some of the charges and stories from the plaintiffs, along with some shocking statistics to support them.

Harmful Content on Social Media

One of the lawsuits’ main claims is that social media networks lack adequate protections for the children who use them.

Credit: Shutterstock/ Jelena Stanojkovic

A March 2022 survey of teens in the United Kingdom found that 15% of respondents had seen sexualized images on TikTok and 12% had seen violent or gory images; 8% had seen pornography, and 7% had seen content relating to dietary restriction. On Instagram, 12% had seen sexualized images, 12% had seen violent or gory images, 7% had seen pornography and 10% had seen images of dietary restriction.

All of this content violates Instagram’s and TikTok’s codes of conduct for users. Seeing it online can also lead to mental health problems, including eating disorders, pornography addiction and self-harm, especially in young people whose brains are still developing.

Until now, however, a provision of a 1996 US law has shielded such companies from liability for third-party material uploaded to their sites. Section 230 of the Communications Decency Act largely protects platforms from legal responsibility for content posted by their users.

The Social Media Victims Law Center is a legal practice dedicated to representing parents who wish to sue social media companies. Its founding attorney, Matthew P. Bergman, has had to pivot to product liability law to bring cases against these companies.

Credit: Shutterstock/ARMMY PICCA

That is, instead of blaming the sites for the content they host, the plaintiffs’ lawyers need to prove that the products themselves (i.e., the apps) are not fit for purpose.

The Dangers of Social Media Algorithms

A key way in which the lawyers are building a case against these companies is by attacking social media algorithms. These recommendation systems are designed to keep showing you content that you’re interested in and likely to enjoy.

However, such algorithms put social media’s younger users at risk. If a young child likes or comments on a video containing harmful content, they will keep being shown similar videos. The same feedback loop can also reshape the worldview of children and adults alike. For example, “crunchytok” videos can make alternative medicine appear to be the only acceptable cure for illness.

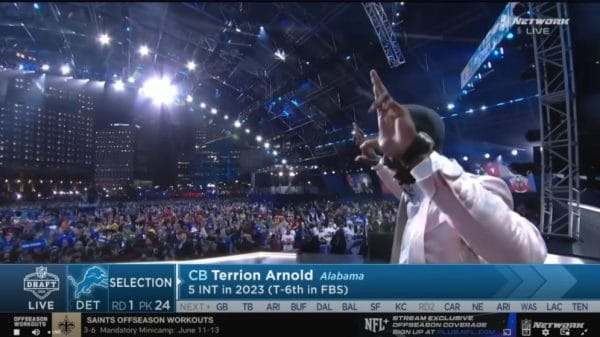

One of the plaintiffs is 20-year-old Alexis Spence. She alleges that she joined Instagram at age 11, easily bypassing its age restrictions, and quickly encountered content promoting anorexia, which she says led to her developing an eating disorder at the age of 12.

Credit: Shutterstock/ Ground Picture

She says, “It started as, like, fitness stuff. And then I guess that would spark the algorithm to show me diets. It then started to shift into eating disorders.”

She also said that because behaviors such as taking diet pills were so prevalent in her social media feed, they came to seem normal to her.

Young people who encounter self-harm content have had similar experiences, as the case of Taylor Little shows. Little is 21, uses they/them pronouns and, like Spence, is suing social media companies. They allege that they were first shown a graphic self-harm picture online at the age of 11, and say that they can “still see it” even ten years later.

Little says they are determined to win against social media companies because “they know we’re dying. They don’t care. They make money off us dying.”

Addictiveness

Credit: Shutterstock/ InFocus.ee

Another key issue for Little is the addictive nature of social media, which algorithms and the rise of short-form video content have both fed into. They say that they were “trapped by my addiction at age 12. And I did not get my life back for all of my teenage years.”

Some studies have suggested that social media can be as addictive as cocaine. In 2023, a survey by University College London (UCL) found that 48% of British teenagers think they are addicted to social media. With extreme usage, such addiction can affect children’s neurological development, and it can harm adults too.

See it for yourself. Last December, an online challenge dared people to watch a one-and-a-half-minute TikTok video without getting distracted. If you’re a regular TikTok user, the results may shock you.

Addiction and exposure to harmful content can also overlap in worrying ways. In February 2020, another UK study found that 87% of children who spent more than ten hours a day on social media had seen harmful content in the past year. That compares with 76% of those who used it for two hours or less, a figure that is itself extraordinarily high.

Disproportionately Affecting Vulnerable Groups

Worryingly, recent studies suggest that teens from vulnerable groups are more likely than their peers to come across harmful content.

A U.S. study of 11- to 15-year-old girls found that 75% of those surveyed who experienced depressive symptoms had come across material related to suicide or self-harm on Instagram, compared with 26% of their peers.

Credit: Shutterstock/ Antonio Guillem

The same study also found that 43% of all respondents had seen harmful eating disorder content on TikTok, and 37% on Instagram. These are also two of the apps most used by British and American teenagers.

A UK study from 2022 found that socio-economic status might affect the likelihood of children encountering harmful material online. Children receiving free school meals, which pupils are generally eligible for if their parents receive certain government benefits, were more likely to see harmful content.

They were twice as likely as their peers to encounter self-harm material. They were also almost twice as likely to see images of dietary restriction and sexualized images. Finally, they were almost three times as likely to have seen pornography online.

Who’s Responsible For Harm To Children On Social Media?

In 2018, 89% of respondents to a survey on who was responsible for children’s smartphone use said parents and caregivers. Only 1% said the companies that make apps.

In 2023, a similar survey asked who was responsible for preventing social media from harming children. Of those surveyed, 51% said parents, whilst 19% said social media companies and 17% said the federal government.

Credit: Shutterstock/DaisyDaisy

Over the past five years, blame for the harm social media does to children has begun to shift away from parents. As the results of companies’ internal investigations have come to light, we’ve become more skeptical of the networks’ claims that their platforms are safe and secure.

In 2021, Instagram ran an internal experiment in which an employee posed as a thirteen-year-old girl looking for weight loss tips on the platform. The account was quickly led to content on anorexia and binge eating. Former employees have also alleged that the company’s leadership was aware of data suggesting that 1 in 3 girls felt worse about their bodies after using the app, yet did nothing about it.

Kathleen Spence, the mother of Alexis Spence, has said that for years, parents have been “gaslighted by the big tech companies that it’s our fault.” However, with the onslaught of lawsuits against these companies, she hopes to send a strong message to social media sites: “You need to do better. I’m doing everything I can. You need to do better.”

Will These Lawsuits Succeed?

Credit: Shutterstock/ Kicking Studio

It’s uncertain how these lawsuits will play out. But one thing is certain: social media is harmful to our children, and probably to us as well. If these suits succeed, they could mark the end of the era of sweeping internet-age problems under the carpet. Hopefully, we will finally see proper regulation of these companies to protect us.

Until then, consider deleting Instagram and TikTok from your phone. Even if you still use them in your computer’s browser, it’s one small step in the right direction.